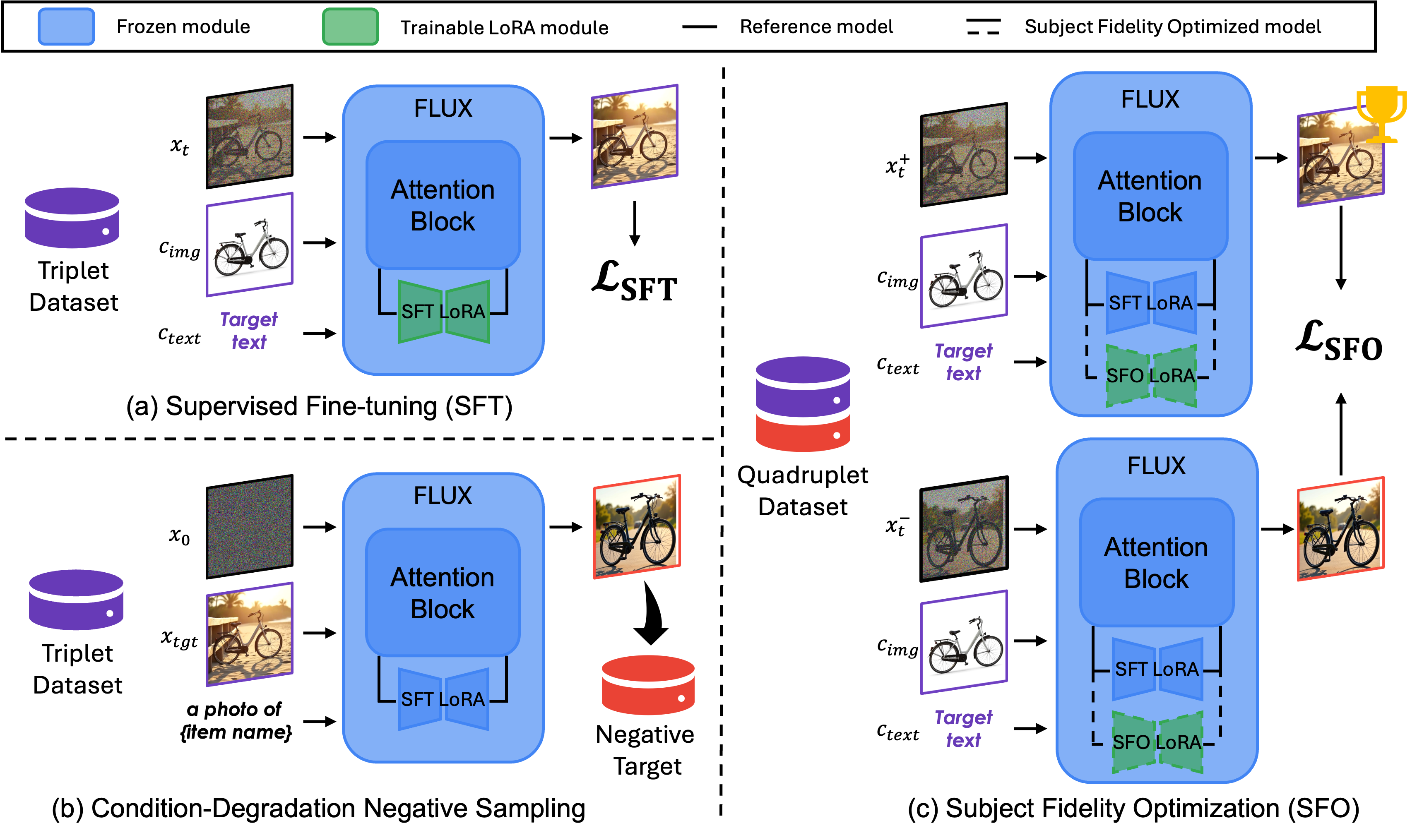

We present Subject Fidelity Optimization (SFO), a novel comparative learning framework for zero-shot subject-driven generation that enhances subject fidelity. Existing supervised fine-tuning methods, which rely only on positive targets and use the diffusion loss as in the pre-training stage, often fail to capture fine-grained subject details. To address this, SFO introduces additional synthetic negative targets and explicitly guides the model to favor positives over negatives through pairwise comparison. For negative targets, we propose Condition-Degradation Negative Sampling (CDNS), which automatically produces synthetic negatives tailored for subject-driven generation by introducing controlled degradations that emphasize subject fidelity and text alignment without expensive human annotations. Moreover, we reweight the diffusion timesteps to focus fine-tuning on intermediate steps where subject details emerge. Extensive experiments demonstrate that SFO with CDNS significantly outperforms recent strong baselines in terms of both subject fidelity and text alignment on a subject-driven generation benchmark.

Conventional zero-shot, subject-driven text-to-image (TTI) approaches use supervised fine-tuning (SFT) on triplets (reference image, target text, and target image), so the model implicitly imitates the subject without clear guidance on which attributes matter. Subject Fidelity Optimization (SFO) replaces each triplet with a quadruplet by adding a deliberately low-fidelity negative target. The negatives are generated from the supervised fine-tuned model by Condition-Degradation Negative Sampling (CDNS), which intentionally degrades both the reference image and the prompt to amplify failure cases and generate diverse counter-examples. Training then employs a pairwise preference objective that drives the model to score the positive higher than its paired negative, while logit-normal timestep sampling concentrates learning on the denoising stages where subject details emerge. This combination of CDNS, comparison-based learning, and timestep reweighting sharply improves subject fidelity and maintains text alignment, outperforming previous zero-shot methods without human-labeled rewards or per-subject tuning.
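The two training ingredients described above, the pairwise comparison and the logit-normal timestep sampling, can be illustrated with a minimal sketch. This page does not spell out the exact loss, so the objective below is an assumption: a Diffusion-DPO-style logistic loss on denoising errors, measured relative to a frozen reference model. The function names, the `beta` temperature, and the `mean`/`std` defaults are hypothetical and chosen only for illustration.

```python
import math
import random


def sample_logit_normal_timestep(mean=0.0, std=1.0, rng=random):
    """Draw a timestep t in (0, 1) as the sigmoid of a Gaussian sample.

    With mean=0 the density peaks near t=0.5, so intermediate denoising
    steps are sampled more often than the two extremes.
    (mean/std values here are illustrative assumptions.)
    """
    z = rng.gauss(mean, std)
    return 1.0 / (1.0 + math.exp(-z))


def pairwise_preference_loss(err_pos, err_neg, ref_err_pos, ref_err_neg, beta=1.0):
    """Assumed Diffusion-DPO-style pairwise loss on denoising errors.

    err_pos / err_neg: the trained model's denoising error on the positive
    and negative targets. ref_err_*: the same errors under a frozen
    reference model. The loss shrinks when the model improves on the
    positive more than on the negative, relative to the reference.
    """
    margin = (err_pos - ref_err_pos) - (err_neg - ref_err_neg)
    x = beta * margin
    # Numerically stable softplus(x), which equals -log(sigmoid(-x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


if __name__ == "__main__":
    rng = random.Random(0)
    timesteps = [sample_logit_normal_timestep(rng=rng) for _ in range(5)]
    print("sampled timesteps:", [round(t, 3) for t in timesteps])

    # Model fits the positive better and the negative worse than the reference.
    print("loss:", pairwise_preference_loss(0.2, 0.5, 0.3, 0.3))
```

In this sketch the loss equals log 2 when the model and the reference agree on both targets, and it falls below that value once the model prefers the positive target more strongly than the reference does.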

SFO demonstrates high-quality zero-shot subject-driven text-to-image generation, achieving both strong subject fidelity and accurate text alignment through comparison-based learning.

SFO shows substantial quality gains over various zero-shot subject-driven text-to-image methods, particularly in fine details. It also outperforms our supervised fine-tuned base model, OminiControl, highlighting the necessity of comparison-based learning.

@misc{shin2025negativeguidedsubjectfidelityoptimization,
      title={Negative-Guided Subject Fidelity Optimization for Zero-Shot Subject-Driven Generation},
      author={Chaehun Shin and Jooyoung Choi and Johan Barthelemy and Jungbeom Lee and Sungroh Yoon},
      year={2025},
      eprint={2506.03621},
      archivePrefix={arXiv},
      primaryClass={cs.CV},
      url={https://arxiv.org/abs/2506.03621},
}